This guy turned into such a cocksucker. Fuck WordPress and fuck Matt.

- 1 Post

- 136 Comments

3·12 days ago

Man, I feel that complaining about the free snacks 100%.

Covid was so hard for some of these kids because they had to fend for themselves during the work day while working from home. Constant complaints.

You and I would get along great, I bet :D

41·12 days ago

Get fucked, NSA

7·12 days ago

I work in tech now, so I’m a lazy schlub. However, I’m also a college dropout (English major) who had a ton of actual jobs in the past: loading delivery trucks at a warehouse, working in a cabinet shop, food service, etc.

I think college grads who go into tech should have to work a normal job for at least a year before getting their tech job and making six figures right out of college.

Otherwise you end up with these entitled shitbags who complain that their company-provided duck confit at lunch doesn’t have crispy enough skin (an actual thing that actually happened when I was at a big FANG company. Fucking unbelievable).

So even though I’m a techbro shitlord, I have respect for the people who work jobs.

378·19 days ago

Pirate a copy of Windows 11 N. It’s the EU version that doesn’t have any of this dogshit in it.

3·21 days ago

lol what, a graph database for spreadsheet work?

281·27 days ago

Kagi is good. I’m very happy with it.

44·2 months ago

Delete this entire post or shut up, bro. You’re being nuked with downvotes.

Chaos Space Marine from Warhammer 40k

Updated post with link

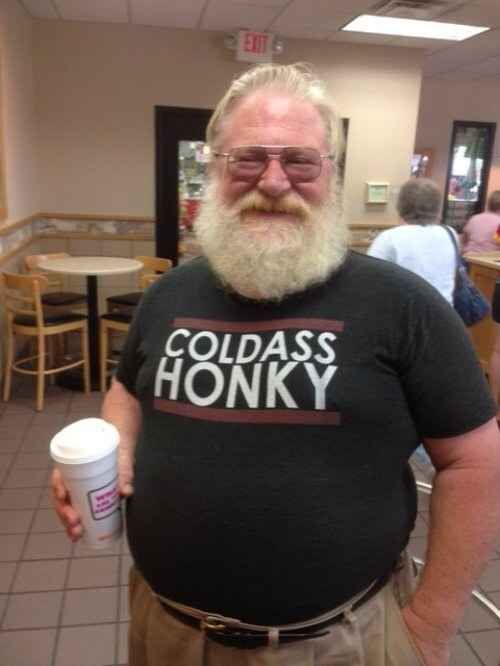

It’s not. I’m literally wearing it as we speak.

4·2 months ago

That’s not really… possible at this point. We have thousands of customers (some very large ones, like A——n and G—-e and Wal___t) with tens or hundreds of millions of users, and even at our lowest-traffic periods we do 60k+ queries per second.

This is the same MySQL instance I wrote about a while ago that hit the 16TiB table size limit (due to ext4 file system limitations) and caused a massive outage, the worst I’ve been involved in during my 26-year career.

Every day I am shocked at our scale, considering my company is only like 90 engineers.

43·2 months ago

Just had to restart our main MySQL instance today. Had to do it at 6am since that’s the lowest traffic point, and boy howdy this resonates.

2 solid minutes of the stack throwing 500 errors until the db was back up.

111·2 months ago

“Too fucking bad”

71·2 months ago

Rofl. My dad is WHY I do this.

21·2 months ago

Fair point. I usually just correct people when they are talking about cement concrete. I’ve never actually heard of “asphalt concrete”.

I just inherited this quirk from my dad, is all.

17·2 months ago

Well, technically the tanks do heat water that is already hot, to maintain the temp.

But I do get your point; I myself say that sometimes by accident.

I like and support Kagi. Whatever.