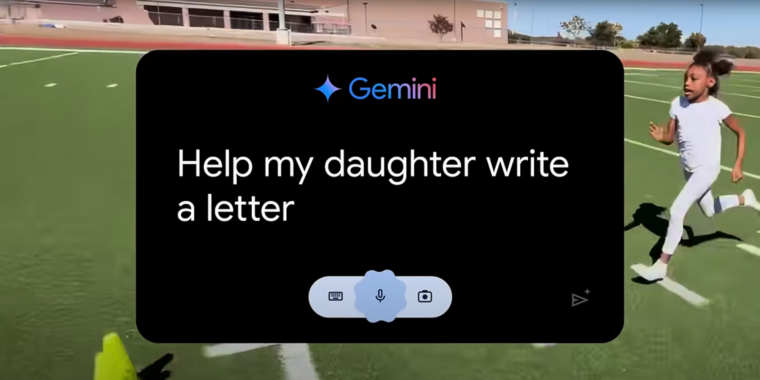

If you’ve watched any Olympics coverage this week, you’ve likely been confronted with an ad for Google’s Gemini AI called “Dear Sydney.” In it, a proud father seeks help writing a letter on behalf of his daughter, who is an aspiring runner and superfan of world-record-holding hurdler Sydney McLaughlin-Levrone.

“I’m pretty good with words, but this has to be just right,” the father intones before asking Gemini to “Help my daughter write a letter telling Sydney how inspiring she is…” Gemini dutifully responds with a draft letter in which the LLM tells the runner, on behalf of the daughter, that she wants to be “just like you.”

I think the most offensive thing about the ad is what it implies about the kinds of human tasks Google sees AI replacing. Rather than using LLMs to automate tedious busywork or difficult research questions, “Dear Sydney” presents a world where Gemini can help us offload a heartwarming shared moment of connection with our children.

Inserting Gemini into a child’s heartfelt request for parental help makes it seem like the parent in question is offloading their responsibilities to a computer in the coldest, most sterile way possible. More than that, it comes across as an attempt to avoid an opportunity to bond with a child over a shared interest in a creative way.

Idk, I mean I think this is more honest and practical LLM advertising than what we’ve seen before

I like to say AI is good at what I’m bad at. I’m bad at writing emails, putting my emotions out there (unless I’m sleep deprived up to the point I’m past self consciousness), and advocating for my work. LLMs do what takes me hours in a few seconds, even running locally on my modest hardware.

AI will not replace workers without significant qualitative advancements… It can sure as hell smooth the edges in my own life

You think AI is better than you at putting your emotions out there???

Talking to a rubber duck or writing to a person who isn’t there is an effective way to process your own thoughts and emotions

Talking to a rubber duck that can rephrase your words and occasionally offer suggestions is basically what therapy is. It absolutely can help me process my emotions and put them into words, or encourage me to put myself out there

That’s the problem with how people look at AI. It’s not a replacement for anything, it’s a tool that can do things that only a human could do before now. It doesn’t need to be right all the time, because it’s not thinking or feeling for me. It’s a tool that improves my ability to think and feel

Well, I am pretty sure psychologists and psychiatrists out there would be too polite to laugh at this nonsense.

Precisely, you are giving it a TON more credit than it deserves

At this point, I am kind of concerned for you. You should try real therapy and see the difference

Psychiatrists don’t generally do therapy, and therapists don’t give diagnoses or medication

Therapy is a bunch of techniques to get people talking, repeating their words back to them, and occasionally offering compensation methods or suggesting possible motivations of others. Telling you what to think or feel is unethical - therapy is about gently leading you to the realizations yourself. Therapists can also provide accountability and advice, but they don’t diagnose or hand you the answer - people circle around their issues and struggle to see them, but they need to make the connections themselves

I don’t give AI too much credit - I give myself credit. I don’t lie to myself, and I don’t have trouble talking about what’s bothering me. I use AI as a tool - these kinds of conversations are a mirror I can use to better understand myself. I’m the one in control, but through an external agent. I guide the AI to guide myself

An AI is not a replacement for a therapist, but it can be an effective tool for self reflection

I get what they mean. It can help you articulate what you’re feeling. It can be very hard to find the right words a lot of the time.

If you’re using it as a template and then making it your own then what’s the harm?

It’s the equivalent of buying a card, not bothering to write anything on it, and just signing your name before mailing it out. The entire point of a fan letter (in this case) is the personal touch; if you are just going to take a template and send it, you are basically sending spam.

I am 100% for this if it’s yet another busywork communication in the office; but personal stuff should remain personal.

This is the same reason people think giving cash as a valentine’s gift is unacceptable LOL

Yeah I agree if you send it without doing any kind of personalisation. I think LLMs shine as a template or starting point for various things. From there it’s up to the user to actually make it theirs.

exactly… AI used as a template factory would be a good use.

The problem here (with the commercial in question) is that they present it as Gemini being able to write a proper fan letter that is not even prompted by the fan (it’s the dad for some reason)… THAT is what makes it incredibly cringey

And to state the obvious; of course it would be helpful for anyone with a learning or speech disability, nobody in their right mind would complain about a “wheelchair doing all the work” for a person who cannot walk.

That can be, in many cases, because you don’t read enough to have learned the proper words to express yourself. Maybe you’re even convinced that reading isn’t worth it.

If this is the case, you don’t have anything worth saying. Better stay silent.

I think it can be, if you know how to use it

It literally cannot since it has zero insight into your feelings. You are just choosing pretty words you think sound good.

The future will be bots sending letters to bots and telling the few remaining humans left how to feel about them.

The old people saying we have lost our humanity will be absolutely right for once.

The choices you make have to be based on some kind of logic and inputs with corresponding outputs though, especially on a computer.

Sure, the ones I make… the ones the “AI” makes are literally based on statistical correlation to choices millions of other people have made

My prompt to AI (i.e. write a letter saying how much I love Justin Bieber) is actually less personal input, and value, than just writing “you rock” on a piece of paper… no matter what AI spews.

This would be OK for busywork in the office. The complaint here is not that AI is an OK provider of templates; the issue is that the ad pretends AI-generated fan mail, prompted by the father of the fan (not even the fan themselves), is actually of MORE value than anything the daughter could have put together herself.

Yes, but this is also its own special kind of logic. It’s a statistical distribution.

You can define whatever statistical distribution you want and do whatever calculations you want with it.

The computer can take your inputs, do a bunch of stats calculations internally, then return a bunch of related outputs.

Yes, I know how it works in general.

The point remains that someone else prompting AI to say “write a fan letter for my daughter” has close to zero chance of representing the daughter, who is not even in the conversation.

Even in general terms, if I ask AI to write a letter for me, it will do so based 99.999999999999999% on whatever it was trained on, NOT me. I can then push more and more prompts to “personalize” it, but at that point you are basically dictating the letter and just letting AI do grammar and spelling

Again, you completely made up that number.

I think you should look up statistical probability tests for the means of normal distributions, at least if you want a stronger argument.

I’d view it as an opportunity for AI to provide guidance like “how can I express this effectively”, rather than just an AI doing it instead of you in an “AI write this” way.

That’s true too, it can give you examples to get you started, although it can be pretty hit or miss for that. Most models tend to be very clinical and conservative when it comes to mental health and relationships

I like to use it to actively listen and help me arrange my thoughts, and encourage me to go through with things. Occasionally it surprises me with solid advice, but mostly it’s helpful to put things into words, have them read back to you, and decide if that sounds true